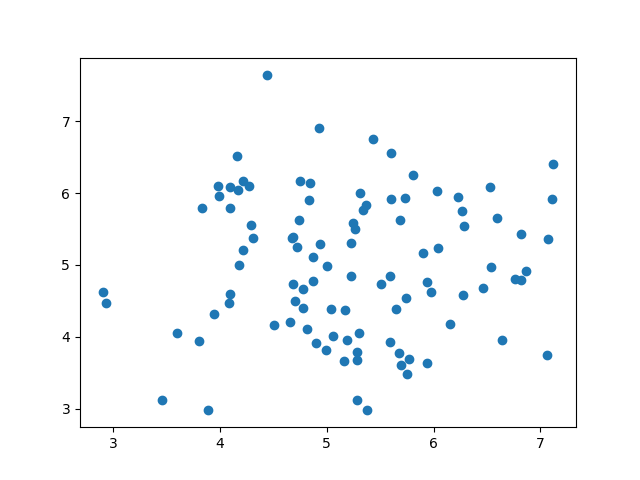

When the number of points exceeds N = 10,000, performance slows down, both while the figure window is being shown and while interacting with the plot.
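One commonly suggested mitigation for this slowdown is to draw the markers with `plt.plot` instead of `plt.scatter`. `scatter` returns a `PathCollection`, which can give every point its own size and color. `plot` with a marker-only format string such as `"o"` returns a single `Line2D` whose markers all share one style, and that is generally why it tends to be faster for large N.

The sketch below times both approaches on the non-interactive Agg backend. The point count, marker sizes and helper names (`draw_with_scatter`, `draw_with_plot`, `time_draw`) are illustrative assumptions. Measured timings will vary with machine, backend and Matplotlib version.

```python
import time

import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so only rendering cost is measured
import matplotlib.pyplot as plt
import numpy as np


def draw_with_scatter(x, y):
    """Render the points with scatter: one PathCollection, per-point styling possible."""
    fig, ax = plt.subplots()
    # s is an area in points**2, so s=4 matches markersize=2 below
    artist = ax.scatter(x, y, s=4)
    fig.canvas.draw()  # force a full render
    return fig, artist


def draw_with_plot(x, y):
    """Render the same points with plot and a marker-only format: one Line2D, shared styling."""
    fig, ax = plt.subplots()
    (artist,) = ax.plot(x, y, "o", markersize=2)
    fig.canvas.draw()
    return fig, artist


def time_draw(draw, x, y):
    """Wall-clock seconds for one call of `draw`; the figure is closed afterwards."""
    start = time.perf_counter()
    fig, _ = draw(x, y)
    elapsed = time.perf_counter() - start
    plt.close(fig)
    return elapsed


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 100_000  # arbitrary size, well above 10,000 points
    x, y = rng.normal(size=(2, n))
    print(f"scatter: {time_draw(draw_with_scatter, x, y):.3f} s")
    print(f"plot:    {time_draw(draw_with_plot, x, y):.3f} s")
```

The trade-off is flexibility. If the color or size must vary per point (as with `c=dS` later in this post), `plot` cannot express that directly, and `scatter` or one of the data-reduction approaches is needed.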

# Python scatter plot with thousands of points

A scatter plot (also called a scatter chart or scatter graph) uses dots to represent values. When there are lots of data points to plot, the dots start to overlap and drawing slows down. I would like to use Matplotlib to generate a scatter plot with a huge amount of data (about 3 million points). I have three vectors of the same length, `delta`, `vf` and `dS`, and I plot them as 3D data: two positions plus a color.

One simple but approximate way to reduce the number of markers is to bin the data. Unfortunately, np.histogram does not seem to offer an easy way to associate bins with individual data points. Instead, use the location of a bin edge as a proxy for the points that fall in that bin (`yedges` and `zedges` are analogous arrays of bin edges):

```python
xedges = np.linspace(-10, 10, 100)
hist, edges = np.histogramdd((delta, vf, dS), (xedges, yedges, zedges))
xidx, yidx, zidx = np.where(hist > 0)  # indices of the non-empty bins
plt.scatter(xedges[xidx], yedges[yidx], c=zedges[zidx], alpha=0.7, cmap=cm.Paired)
```
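Below is a self-contained version of the bin-edge idea, written under some assumptions:

- `delta`, `vf` and `dS` are synthetic normal samples standing in for the real vectors.
- The edge arrays mirror `np.linspace(-10, 10, 100)`, with fewer edges on the color axis (an arbitrary choice).
- The helper name `bin_edge_proxies` is hypothetical.

Each non-empty 3D bin contributes one marker at its lower edges. The number of drawn markers is therefore bounded by the number of bins rather than the number of points. Points outside the edges are ignored by `np.histogramdd`.

```python
import matplotlib.pyplot as plt
import numpy as np


def bin_edge_proxies(delta, vf, dS, xedges, yedges, zedges):
    """One representative per non-empty 3D bin, placed at the bin's lower edges.

    Returns (x, y, z, counts): bin-edge coordinates plus how many points each bin holds.
    Points outside the given edges are ignored by np.histogramdd.
    """
    hist, _ = np.histogramdd((delta, vf, dS), bins=(xedges, yedges, zedges))
    xidx, yidx, zidx = np.nonzero(hist)  # bins containing at least one point
    return xedges[xidx], yedges[yidx], zedges[zidx], hist[xidx, yidx, zidx]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-ins for the real delta, vf and dS vectors (assumption)
    delta, vf, dS = rng.normal(size=(3, 3_000_000))

    xedges = np.linspace(-10, 10, 100)
    yedges = np.linspace(-10, 10, 100)
    zedges = np.linspace(-10, 10, 10)

    x, y, z, counts = bin_edge_proxies(delta, vf, dS, xedges, yedges, zedges)
    print(f"{delta.size:,} points -> {x.size:,} markers")
    plt.scatter(x, y, c=z, alpha=0.7, cmap="Paired")
    plt.show()
```

The returned `counts` are not used in the plot above. They could be passed as `s=` or `c=` if the density inside each bin should remain visible.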

A scatter plot is a visual representation of how two variables relate to each other. The straightforward call for this data is:

```python
plt.scatter(delta, vf, c=dS, alpha=0.7, cmap=cm.Paired)
```

Unless your graphic is huge, many of those 3 million points are going to overlap. So the easiest thing to do would be to take a sample of, say, 1,000 points from your data (for example with Python's `random` module) and plot only the sample. Or, if you need to pay more attention to outliers, you could bin your data using np.histogram and then compose a `delta_sample` that has representatives from each bin.

For reference, the main parameters of `plt.scatter` are:

- `x`, `y`: float or array-like, shape (n, ). The data positions.
- `s`: float or array-like, shape (n, ), optional. The marker size in points² (typographic points are 1/72 in.). Default is `rcParams['lines.markersize'] ** 2`.
- `c`: array-like or list of colors or color, optional. The marker colors.

A related summary for run-time measurements is to bucket the data and draw one candlestick per bucket, which shows the dispersion of log run times for each bucket.
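Here is a sketch of both sampling suggestions. It uses NumPy's random `Generator` instead of the `random` module, a swap of convenience because it samples index arrays directly.

- `random_subsample` draws 1,000 indices uniformly.
- `per_bin_subsample` is one possible reading of "representatives from each bin". It takes up to `per_bin` points from every non-empty `np.histogram` bin of `delta`.

Function names, bin counts and the synthetic data are illustrative assumptions.

```python
import matplotlib.pyplot as plt
import numpy as np


def random_subsample(n_points, k, rng):
    """Indices of k points drawn uniformly at random without replacement."""
    return rng.choice(n_points, size=min(k, n_points), replace=False)


def per_bin_subsample(values, bins, per_bin, rng):
    """Indices with up to `per_bin` randomly chosen representatives from every
    non-empty bin of np.histogram(values, bins), where `bins` is a bin count.
    Sparse bins (e.g. outliers in the tails) are therefore always represented."""
    values = np.asarray(values)
    _, edges = np.histogram(values, bins=bins)
    # Map every value to its bin; digitizing against the interior edges gives 0..bins-1
    bin_ids = np.digitize(values, edges[1:-1])
    chosen = []
    for b in np.unique(bin_ids):
        members = np.flatnonzero(bin_ids == b)
        chosen.append(rng.choice(members, size=min(per_bin, members.size), replace=False))
    return np.concatenate(chosen) if chosen else np.empty(0, dtype=np.intp)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 3_000_000
    # Synthetic stand-ins for delta, vf and dS (assumption)
    delta, vf, dS = rng.normal(size=(3, n))

    idx = random_subsample(n, 1000, rng)           # plain random sample
    # idx = per_bin_subsample(delta, 50, 20, rng)  # alternative that keeps sparse tails
    plt.scatter(delta[idx], vf[idx], c=dS[idx], alpha=0.7, cmap="Paired")
    plt.show()
```

The two approaches trade off differently:

- Uniform sampling preserves the overall density of the cloud but can miss rare points.
- Per-bin sampling keeps the tails at the cost of over-representing them relative to the dense core.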

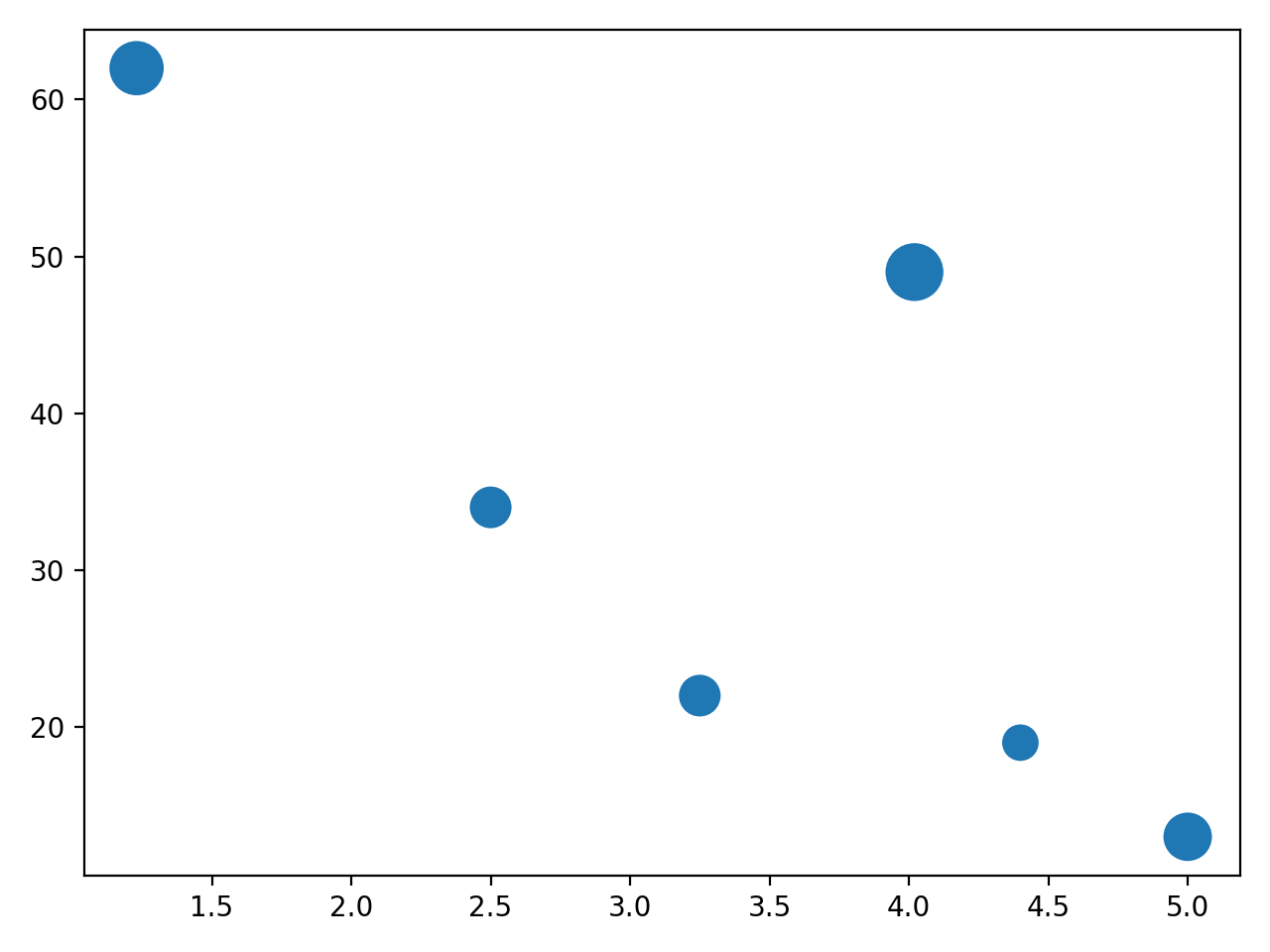

A scatter plot is a type of plot that shows the data as a collection of points. The following example, based on the Matplotlib gallery, draws 24 random points with individual marker sizes and colors:

```python
import matplotlib.pyplot as plt
import numpy as np

# make the data
np.random.seed(3)
x = 4 + np.random.normal(0, 2, 24)
y = 4 + np.random.normal(0, 2, len(x))
# size and color:
sizes = np.random.uniform(15, 80, len(x))
colors = np.random.uniform(15, 80, len(x))

# plot
fig, ax = plt.subplots()
ax.scatter(x, y, s=sizes, c=colors)
plt.show()
```

While it doesn't matter as much for small amounts of data, as datasets get larger than a few thousand points, plt.plot can be noticeably more efficient than plt.…

The run time as a function of the size is usually polynomial (when you're lucky) or exponential. Hence, I'd first try to figure out which is the case. For the former, try log-transforming both time and size, then scatter. For the latter, try log-transforming only time: if $t = a e^{bs}$, then $\ln t = \ln a + bs$ is linear in $s$. If neither of these scatter plots shows a linear pattern, then we need to think of something else; otherwise, you're good. The point is to get to some kind of linear function, as linear relationships tend to be easier to present and consume.

If you know the theoretical run-time function, such as $O(n\ln n)$, then you can use it for the transformation too. For instance, say your run time $t$ is $t=10s^3$, where $s$ is the size of the problem. Then $\ln t=\ln 10+3\ln s$, which would be handled by the log-log transform.

Also, you could bucket your data into bins. After the log transform, break the log of size, $\ln s$, into buckets, and then put a candlestick on each bucket. This will create a candlestick chart like the one shown.
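The transform-then-bucket recipe can be sketched on synthetic timings. The sketch rests on these assumptions:

- Run times follow the $t=10s^3$ example with multiplicative log-normal noise.
- R² from a least-squares line serves as a rough numeric stand-in for eyeballing whether a scatter plot looks linear.
- Matplotlib's `boxplot` is swapped in for the candlesticks. Each box still shows the spread of $\ln t$ within one bucket of $\ln s$.

The helper names `linear_fit` and `bucket_log_times` are hypothetical.

```python
import matplotlib.pyplot as plt
import numpy as np


def linear_fit(x, y):
    """Least-squares line y ~ slope*x + intercept, plus R^2 as a rough linearity score."""
    slope, intercept = np.polyfit(x, y, deg=1)
    residuals = y - (slope * x + intercept)
    r2 = 1.0 - residuals.var() / y.var()
    return slope, intercept, r2


def bucket_log_times(size, run_time, n_buckets):
    """Group ln(run_time) into equal-width buckets of ln(size); empty buckets are dropped.

    Returns (bucket centers, list of ln(run_time) arrays, bucket width).
    """
    log_s, log_t = np.log(size), np.log(run_time)
    edges = np.linspace(log_s.min(), log_s.max(), n_buckets + 1)
    ids = np.digitize(log_s, edges[1:-1])  # bucket index in 0..n_buckets-1
    centers, groups = [], []
    for b in range(n_buckets):
        members = log_t[ids == b]
        if members.size:
            centers.append(0.5 * (edges[b] + edges[b + 1]))
            groups.append(members)
    return np.array(centers), groups, edges[1] - edges[0]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical timings: sizes spread evenly in ln s, t = 10 s^3 with log-normal noise
    size = np.exp(rng.uniform(np.log(10), np.log(10_000), 50_000))
    run_time = 10 * size**3 * rng.lognormal(mean=0.0, sigma=0.3, size=size.size)
    log_s, log_t = np.log(size), np.log(run_time)

    # Polynomial run time should look linear on log-log axes, exponential on semi-log axes
    print("log-log  slope, intercept, R^2:", linear_fit(log_s, log_t))
    print("semi-log slope, intercept, R^2:", linear_fit(size, log_t))

    centers, groups, width = bucket_log_times(size, run_time, n_buckets=12)
    fig, ax = plt.subplots()
    # One box per ln(s) bucket, standing in for the candlesticks
    ax.boxplot(groups, positions=centers, widths=0.8 * width, manage_ticks=False)
    ax.set_xlabel("ln s (bucket center)")
    ax.set_ylabel("ln t")
    plt.show()
```

On noise-free $t=10s^3$ data, the log-log fit recovers slope 3 and intercept $\ln 10$ up to rounding, which is exactly the relation $\ln t=\ln 10+3\ln s$. With $O(n\ln n)$ or another known model, the same `linear_fit` check can be applied after dividing out or transforming by that model instead.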
